In the previous post I installed the AWS CLI in order to aid in accessing S3.

Enabling Instance user data script execution on startup

I would expect the script added to the user data section to execute at boot time. Generally this is the case, but not here. The reason is that scripts specified in user data are executed only once, at the initial boot. As a result, user data supplied when launching a new VM from the custom AMI is ignored.

All hope is not lost though, because there is a way to enable user data script execution on boot up.

The Windows instances launched in AWS are packaged with a service called "EC2Config Service", which is used to enable advanced features. For more information, refer to the AWS documentation for the EC2Config service.

The EC2Config service manages a file called "Config.xml", which is located in the "C:\Program Files\Amazon\Ec2ConfigService\Settings" folder. This XML file defines the features/tasks that are executed at boot time. What I am interested in is the "Ec2HandleUserData" feature, which is set to "Disabled" at the moment. I need to set this feature to "Enabled" so that user data scripts are executed during the next reboot. Once the scripts have executed, this setting is automatically set back to "Disabled" so that they are not executed during subsequent reboots.
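The change can also be scripted rather than edited by hand. The following is a minimal sketch, assuming Config.xml uses the usual `Ec2ConfigurationSettings > Plugins > Plugin` layout with `Name` and `State` child elements. The function name `Set-Ec2ConfigPluginState` is my own and is not part of EC2Config.

```powershell
# Sketch: set the state of a named plugin in the EC2Config Config.xml.
# Assumed layout: Ec2ConfigurationSettings > Plugins > Plugin > Name / State.
# Set-Ec2ConfigPluginState is a hypothetical helper, not an AWS cmdlet.
function Set-Ec2ConfigPluginState {
    param(
        [Parameter(Mandatory = $true)] [string] $Path,
        [Parameter(Mandatory = $true)] [string] $Name,
        [ValidateSet('Enabled', 'Disabled')] [string] $State = 'Enabled'
    )

    # Resolve to a full path because XmlDocument.Save does not use the PowerShell location.
    $fullPath = (Resolve-Path -Path $Path).Path
    [xml] $config = Get-Content -Path $fullPath

    $found = $false
    foreach ($plugin in @($config.Ec2ConfigurationSettings.Plugins.Plugin)) {
        if ($plugin.Name -eq $Name) {
            $plugin.State = $State
            $found = $true
        }
    }
    if (-not $found) {
        throw "Plugin '$Name' not found in $fullPath"
    }

    $config.Save($fullPath)
}

# Example (run from an elevated prompt on the instance, before creating the AMI):
# $settings = 'C:\Program Files\Amazon\Ec2ConfigService\Settings\Config.xml'
# Set-Ec2ConfigPluginState -Path $settings -Name 'Ec2HandleUserData'
```

The helper throws if the plugin name is not present, so a typo in the name does not silently leave the file unchanged.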

There is another tag called "Ec2SetPassword", which resets the password of the instance during boot up. I have enabled this feature too. Going forward, each instance will have its own password, which is good for security. Otherwise all the VMs launched using the custom AMI would share the same password. A byproduct of resetting the password is that the user data script executes with the permissions of the local administrator account. Otherwise the user data script executes under the EC2Config service user.

The base image of the VM needs to be updated once the above changes are made. The following screen capture illustrates the features discussed above.

Contents of the Config.xml file (enabling the Ec2SetPassword and Ec2HandleUserData features)

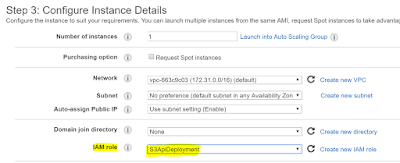

Launching a new VM with boot up scripts

A new VM needs to be launched with the user data script. The custom script looks like this:

<powershell>

aws s3 cp s3://simpleapistartup/ c:\Deployment\ --recursive

cd c:\Deployment

.\ApiService.deploy.cmd /Y -enableRule:DoNotDelete

</powershell>

[Screenshot: Adding the user data script at launch time]

Once the VM has launched successfully (and both the system and instance status checks have passed), I can simply use the following URL to access the API service.

http://ec2-54-194-113-255.eu-west-1.compute.amazonaws.com:88/api/products

The domain name in this case is the EC2 public DNS name, followed by the port number on which the service is running. Note that each EC2 instance has a unique DNS name, which means I need a way to aggregate multiple instances in order to provide a fault-tolerant service.
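Because the deployment runs during boot, the service may not answer immediately after the status checks pass. As a rough sketch, a small polling helper can wait for the endpoint to come up. `Wait-ForEndpoint` and its default attempt count and delay are my own assumptions, not something the EC2Config service provides.

```powershell
# Sketch: poll an HTTP endpoint until it returns 200 or the attempts run out.
# Wait-ForEndpoint is a hypothetical helper; the defaults are arbitrary.
function Wait-ForEndpoint {
    param(
        [Parameter(Mandatory = $true)] [string] $Uri,
        [int] $MaxAttempts = 10,
        [int] $DelaySeconds = 15
    )

    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            $response = Invoke-WebRequest -Uri $Uri -UseBasicParsing -TimeoutSec 10
            if ($response.StatusCode -eq 200) {
                return $true
            }
        }
        catch {
            # Connection failures and non-success status codes end up here.
            Write-Verbose "Attempt $attempt failed: $($_.Exception.Message)"
        }
        if ($attempt -lt $MaxAttempts) {
            Start-Sleep -Seconds $DelaySeconds
        }
    }
    return $false
}

# Example, using the public DNS name of the instance from this post:
# Wait-ForEndpoint -Uri 'http://ec2-54-194-113-255.eu-west-1.compute.amazonaws.com:88/api/products'
```

The function returns `$true` as soon as the endpoint responds with HTTP 200 and `$false` if it never does, so it can gate any follow-up step in a script.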

The EC2Config service maintains a log of the execution of the instance user data script. It is located in the "C:\Program Files\Amazon\Ec2ConfigService\Logs\EC2ConfigLog.txt" file.
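To check whether the user data script ran, it is usually enough to look at the end of that log. A minimal sketch, where `Get-Ec2ConfigLogTail` is a hypothetical wrapper of my own around `Get-Content` and the default of 50 lines is arbitrary:

```powershell
# Sketch: show the last lines of the EC2Config log.
# Get-Ec2ConfigLogTail is a hypothetical wrapper, not an AWS cmdlet.
function Get-Ec2ConfigLogTail {
    param(
        [string] $Path = 'C:\Program Files\Amazon\Ec2ConfigService\Logs\EC2ConfigLog.txt',
        [int] $Count = 50
    )

    # -Tail reads only the last $Count lines of the file.
    Get-Content -Path $Path -Tail $Count
}

# Example (on the instance):
# Get-Ec2ConfigLogTail -Count 20
```

Calling `Get-Content` directly with `-Wait` as well keeps the file open and prints new lines as they are written, which is handy while a script is still running.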

[Screenshot: Execution of the PowerShell script]

I can now launch multiple VMs with an initial boot up script and access the service without having to set anything up. This is very powerful. What is great about this is that the infrastructure is immutable: if an instance crashes, I can simply start a new one.

In the next post I am going to use the AWS SDK to automate the launch process.